Hosting This Site #

Since I’ve never hosted a static blog before, I wanted to document the configuration. Maybe someone will find it useful in the future.

AWS #

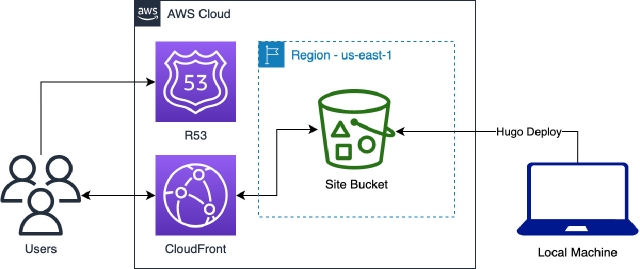

The plan is to host the site in AWS as an S3 static site. The implementation should not be difficult but there are a number of steps involved so let’s document those. In the end, the architecture will look like the following:

Fig 1: Static Deployment 🤦

Set Up A Domain #

I’ll need a domain name for this beautiful site, so let’s see what’s available using Route 53 (R53) as the registrar. Eventually, I settled on friedman.systems, which is a good shout for $21 a year.

Create Target Bucket #

The usual convention for an S3 static site is to name the bucket after its domain (this is required when DNS points straight at the S3 website endpoint), so my bucket name will be friedman.systems. I’ll create the new target bucket via the CLI:

aws --profile iamadmin-personal-general s3 mb s3://friedman.systems

and then add a bucket policy to allow public read access:

{
  "Version": "2012-10-17",
  "Id": "Policy1678060131539",
  "Statement": [
    {
      "Sid": "allow-read-bucket",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::friedman.systems/*"
    }
  ]
}
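If you would rather generate the policy than hand-edit it, a minimal Python sketch along these lines should work. The function name `public_read_policy` is my own invention, and the optional `Id` field is left out; otherwise the output is meant to mirror the statement above.

```python
import json


def public_read_policy(bucket: str) -> dict:
    """Build a bucket policy that lets anyone read objects in `bucket`."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "allow-read-bucket",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                # The trailing /* scopes the grant to objects, not the bucket itself.
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy so it can be saved to a file or pasted into the console.
    print(json.dumps(public_read_policy("friedman.systems"), indent=2))
```

The printed JSON can be saved to a file (say `policy.json`) and applied with `aws s3api put-bucket-policy --bucket friedman.systems --policy file://policy.json`. Depending on the account's Block Public Access settings, the policy may be rejected until those are relaxed for the bucket.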

Next, enable the bucket as a static host by clicking on the bucket, going to Properties > Static Website Hosting > Edit > Enable. Note the bucket website endpoint e.g. http://friedman.systems.s3-website-us-east-1.amazonaws.com.
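For reference, the endpoint in that example follows the pattern bucket name, then `s3-website-<region>`, then `amazonaws.com`. Here is a small Python sketch of that pattern, assuming the dash-style form shown above. Some regions use `s3-website.<region>` with a dot instead, so treat the value shown in the console as authoritative; the helper name is hypothetical.

```python
def website_endpoint(bucket: str, region: str) -> str:
    """Return the dash-style S3 website endpoint for a bucket.

    Assumption: the region uses the `s3-website-<region>` form, as
    us-east-1 does. Some regions use `s3-website.<region>` instead.
    """
    # Website endpoints are plain HTTP, hence the scheme.
    return f"http://{bucket}.s3-website-{region}.amazonaws.com"


if __name__ == "__main__":
    print(website_endpoint("friedman.systems", "us-east-1"))
```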

Serve HTTPS Content Via CloudFront #

S3 website endpoints serve content over HTTP only, so serving the site over HTTPS requires a CloudFront distribution with the S3 bucket as its origin. Navigate to CloudFront and create a new distribution. Be sure to set the Alternate domain name (CNAME) - optional field to your registered domain, e.g. friedman.systems. I enabled logging to an S3 bucket and followed this guide for creating a new logging bucket. Importantly, the logging bucket needs ACLs enabled in order to allow CloudFront logging. I also created a custom SSL certificate using Certificate Manager; note that certificates used with CloudFront must be requested in us-east-1.

Point DNS To Cloudfront #

The last step on the AWS side is to create a DNS record pointing at your CloudFront distribution. From Route 53 > Hosted zones > your_hosted_zone, create an A record with Alias enabled and choose Alias to CloudFront distribution as the traffic target. Copy the distribution domain from CloudFront, e.g. asdf1231asdf12.cloudfront.net, and paste it into the Choose distribution box. Since I didn’t need failover in this case, I chose simple routing.
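The same record can also be written as a Route 53 change batch rather than clicked together in the console. The Python sketch below only builds the JSON; the helper name is hypothetical and the distribution domain is the placeholder from above. `Z2FDTNDATAQYW2` is the fixed hosted zone ID that AWS documents for CloudFront alias targets.

```python
import json

# Hosted zone ID that AWS documents for every CloudFront alias target.
CLOUDFRONT_ZONE_ID = "Z2FDTNDATAQYW2"


def alias_change_batch(domain: str, distribution_domain: str) -> dict:
    """Build a change batch that aliases `domain` to a CloudFront distribution."""
    return {
        "Comment": f"Alias {domain} to CloudFront",
        "Changes": [
            {
                # UPSERT creates the record or overwrites an existing one.
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": domain,
                    "Type": "A",
                    "AliasTarget": {
                        "HostedZoneId": CLOUDFRONT_ZONE_ID,
                        "DNSName": distribution_domain,
                        "EvaluateTargetHealth": False,
                    },
                },
            }
        ],
    }


if __name__ == "__main__":
    batch = alias_change_batch("friedman.systems", "asdf1231asdf12.cloudfront.net")
    print(json.dumps(batch, indent=2))
```

Saved as `change.json`, the output could be submitted with `aws route53 change-resource-record-sets --hosted-zone-id <your zone ID> --change-batch file://change.json`, where the zone ID is that of your own hosted zone, not the CloudFront constant.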

Configure Hugo #

Now that I have a domain and a target bucket, I need Hugo to push directly to the S3 bucket. See Hugo’s docs for the full documentation. Most importantly, I’ve added the following deployment section to config.toml:

[deployment]
[[deployment.targets]]
name = "friedman.systems"
URL = "s3://friedman.systems?region=us-east-1"
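To make the shape of that target URL explicit: the host part is the bucket name and the `region` query parameter is the AWS region. The Python sketch below pulls the two apart using only the standard library. It illustrates the URL's structure and is not a description of how Hugo parses it internally; `parse_target` is a hypothetical helper.

```python
from typing import Tuple
from urllib.parse import parse_qs, urlsplit


def parse_target(url: str) -> Tuple[str, str]:
    """Split an s3:// deployment URL into (bucket, region)."""
    parts = urlsplit(url)
    if parts.scheme != "s3":
        raise ValueError(f"expected an s3:// URL, got {url!r}")
    # parse_qs maps each key to a list of values; take the first region, if any.
    region = parse_qs(parts.query).get("region", [""])[0]
    return parts.netloc, region


if __name__ == "__main__":
    print(parse_target("s3://friedman.systems?region=us-east-1"))
```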

Caching and TTLs #

When initially deploying, or if deploying often, you may want to decrease the cache TTL, especially for HTML files, which are more likely to change than CSS and the like. AWS recommends setting caching headers on the objects in the bucket directly. In my config.toml, I configured the following matchers:

# cache static formatting and scripts for 10 days
[[deployment.matchers]]
pattern = "^.+\\.(js|css|svg|ttf)$"
cacheControl = "max-age=864000"

# cache pages for 10 minutes (likely increase when stable)
[[deployment.matchers]]
pattern = "^.+\\.(html|xml|json)$"
cacheControl = "max-age=600"

# cache images for 1 year. Changing images have hashed versions
# so a long TTL is reasonable
[[deployment.matchers]]
pattern = "^.+\\.(webp|jpg)$"
cacheControl = "max-age=31536000"
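A quick way to sanity-check matchers like these before deploying is to run a few file names through the same patterns. The Python sketch below copies the three patterns and checks the TTL arithmetic. It assumes the first matching pattern wins and that Python's `re` treats these simple patterns the same way Hugo's Go regexes do; `cache_control_for` is a hypothetical helper, not part of Hugo.

```python
import re
from typing import Optional

# (pattern, Cache-Control header) pairs copied from config.toml.
MATCHERS = [
    (r"^.+\.(js|css|svg|ttf)$", "max-age=864000"),   # 10 days
    (r"^.+\.(html|xml|json)$", "max-age=600"),       # 10 minutes
    (r"^.+\.(webp|jpg)$", "max-age=31536000"),       # 1 year
]


def cache_control_for(path: str) -> Optional[str]:
    """Return the header for the first matching pattern, or None if none match."""
    for pattern, header in MATCHERS:
        if re.search(pattern, path):
            return header
    return None


if __name__ == "__main__":
    # Confirm the max-age values match the comments in the config.
    assert 10 * 24 * 60 * 60 == 864000
    assert 10 * 60 == 600
    assert 365 * 24 * 60 * 60 == 31536000
    for name in ["index.html", "book.min.css", "images/photo.jpg", "favicon.png"]:
        print(name, "->", cache_control_for(name))
```

One thing this makes visible: extensions not listed in any pattern, such as `.png`, match nothing and so get no `Cache-Control` header from these matchers.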

Deploy #

First build the site:

❯ hugo --theme hugo-book --cleanDestinationDir
Start building sites …
hugo v0.110.0+extended darwin/amd64 BuildDate=unknown

                   | EN
-------------------+-----
  Pages            | 11
  Paginator pages  |  0
  Non-page files   |  0
  Static files     | 79
  Processed images |  9
  Aliases          |  2
  Sitemaps         |  1
  Cleaned          |  0

Total in 94 ms

Then publish to the S3 bucket:

hugo deploy -v
Deploying to target "friedman.systems" (s3://friedman.systems?region=us-east-1)
INFO 2023/03/05 18:05:28 Found 108 local files.
INFO 2023/03/05 18:05:28 Found 0 remote files.
Identified 108 file(s) to upload, totaling 3.5 MB, and 0 file(s) to delete.
...

Assuming everything transfers as expected, you should be all set to load HTTPS content.